This article will discuss a type of architecture that allows real-time data processing while following a data governance strategy.

After briefly recalling some data governance principles and the main characteristics of a governance framework, we will look at a specific solution based on event-driven architectures (EDA). Finally, we will analyse and provide a simplified critique of the proposed architecture.

Data Governance

Definition

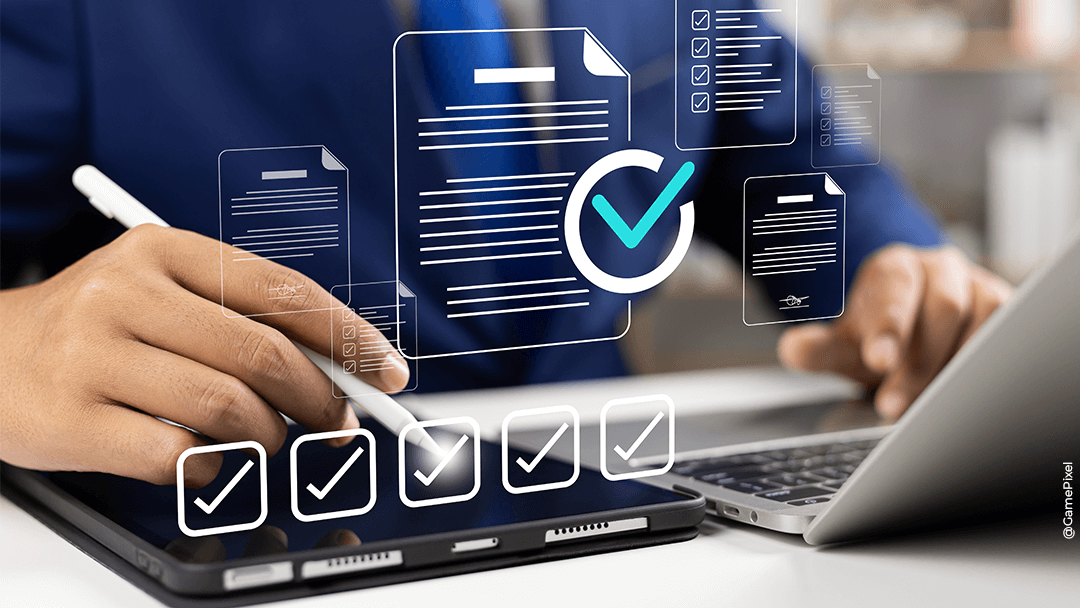

Data governance aims to ensure data quality and security within an organisation. It includes:

- Data availability

- Data control

- Data quality (consistency, integrity, veracity, etc.)

- Data integrity

- Data usability

- …

There are therefore two complementary axes when talking about governance: one focuses on data processing (including storage), and the other on visualisation (making data available to users).

1: This article will mainly focus on the first axis.

2: Here, we focus on defining data management; the political aspects of the definition are considered out of scope.

Principles

Data governance is a protean concept. It often varies according to the approach it is part of: an organisation’s desire to comply with legislation, a department’s desire to rationalise its data processing, and so on. However, some principles hold across the board:

- Data must be identified (origin, owner, storage location, etc.)

- Data must be classified (typology, sensitivity, etc.)

- Data must be guaranteed (quality, integrity, availability, etc.)

- Data must be secure (security of information exchanged, security of processing, confidentiality, etc.)

- We must be able to identify how data is treated (e.g., data lineage)

From these characteristics, we can extract a framework for data governance.
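As an illustration, the principles above can be captured as one record per dataset. The following is a minimal sketch in Python; the `CatalogueEntry` class, its field names, and the `Sensitivity` levels are assumptions made for this example and do not come from a specific tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Sensitivity(Enum):
    # Hypothetical classification levels, for illustration only.
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3


@dataclass
class CatalogueEntry:
    """One dataset as seen by governance (all field names are assumptions)."""

    name: str
    origin: str                # identification: where the data comes from
    owner: str                 # identification: who is accountable for it
    storage_location: str      # identification: where it is stored
    typology: str              # classification: kind of data
    sensitivity: Sensitivity   # classification: how sensitive it is
    lineage: list[str] = field(default_factory=list)  # treatments, in order

    def record_treatment(self, treatment: str) -> None:
        """Append a processing step so that the lineage stays traceable."""
        self.lineage.append(treatment)


entry = CatalogueEntry(
    name="customer_orders",
    origin="order-service",
    owner="sales-department",
    storage_location="orders-topic",
    typology="transaction",
    sensitivity=Sensitivity.CONFIDENTIAL,
)
entry.record_treatment("anonymise customer_id")
entry.record_treatment("aggregate by day")
```

Keeping identification, classification, and lineage in the same record is only one possible design; it makes it easy to answer "where does this data come from and what happened to it" from a single place.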

We will not refer to the notion of data contract as defined by Microsoft in this article, although it would provide complementary elements relating to data governance.

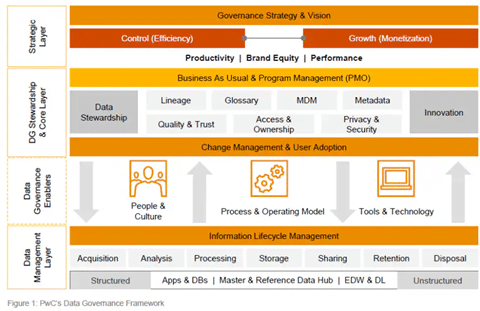

Governance Framework

A data governance framework creates a single set of rules and processes for data collection, storage, and use. A data governance framework:

- Streamlines data governance

- Simplifies evolutions in governance

- Maintains compliance with internal policies and regulations

- Simplifies and supports collaboration

- Ensures the global application of policies and management rules applied to data

A governance framework must operate on several levels:

- A data stewardship program, which is the operational implementation of a data governance program. Data managers perform the day-to-day work of administering the company’s data.

- Proactive management of data quality in the company.

- The formal process to define and document data assets company-wide.

- Corporate management of critical data elements shared between data systems.

- A defined and documented inventory of what data needs to be secured, who can access it, and how to secure it.

- Data privacy, which is vital to risk identification and management. New legislation requires organisations to assess compliance efforts in handling customer data.

- Data/information management from its creation to disposal. It establishes the company’s data retention and destruction standards, policies, and procedures.

Proposed architecture

Introduction

For this article, we will assume that we want to develop a system that operates in real time. In particular, we want to:

- Meet the requirements defined in the data governance framework

- Identify the data (source, forms, etc.)

- Identify treatments and perform a summary lineage

- Secure the data according to its sensitivity

- Identify possible “leakage” linked to uncontrolled treatments.

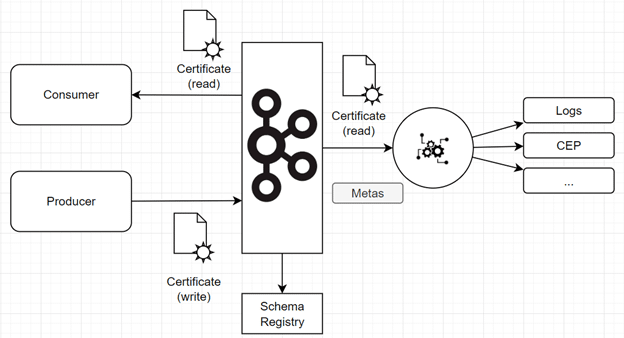

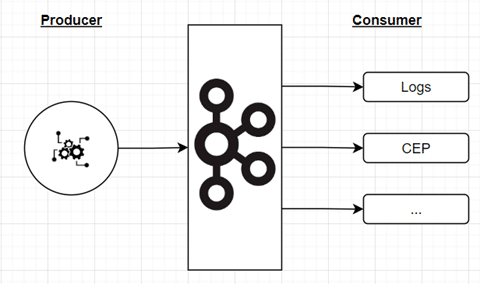

The idea here is to use the concepts of infrastructure as code—and, more generally, everything as code—and implement the framework’s rules within the system. Our system is based on a broker, and all data exchanged goes through it.
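To give an idea of what "rules as code" can look like, here is a minimal sketch in Python. The `Rule` class, the two example rules, and the event shape (a dictionary with `topic`, `headers`, and `payload` keys) are assumptions made for illustration; a real framework would define its own rules.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# Hypothetical event shape: {"topic": ..., "headers": {...}, "payload": {...}}
Event = dict


@dataclass(frozen=True)
class Rule:
    name: str
    check: Callable[[Event], bool]  # returns True when the event complies


# Hypothetical rules; in practice they would be derived from the framework.
RULES = [
    Rule("source-identified", lambda e: bool(e["headers"].get("source"))),
    Rule("sensitivity-declared", lambda e: "sensitivity" in e["headers"]),
]


def violations(event: Event, rules: list[Rule] = RULES) -> list[str]:
    """Return the names of the rules that the event does not satisfy."""
    return [rule.name for rule in rules if not rule.check(event)]


event = {"topic": "orders", "headers": {"source": "order-service"}, "payload": {}}
print(violations(event))  # ['sensitivity-declared']
```

Because the rules are ordinary code, they can be versioned, reviewed, and tested like the rest of the system.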

Principles

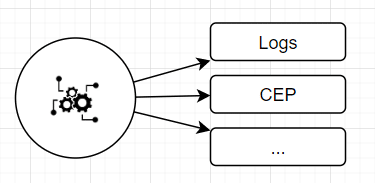

The architecture consists of adding an observer (right side of the diagram) subscribed to the set of available topics. This element captures all the exchanges on the platform (called events). It then applies treatments to:

- Observe the data exchanges (tracing, telemetry, etc.)

- Verify that data exchanges are secure and compatible with governance rules

- Apply quality controls (format, volume, etc.)

- Detect governance rule violations (via Complex Event Processing approaches)
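The observer pattern described above can be sketched as follows. This is a toy, in-memory stand-in written in Python, not a real broker client: `InMemoryBroker`, `GovernanceObserver`, and the `max_fields` volume control are all hypothetical names chosen for the example.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable

Handler = Callable[[str, dict], None]


class InMemoryBroker:
    """Toy stand-in for a real broker, used only to illustrate the pattern."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)
        self._observers: list[Handler] = []

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def observe_all(self, handler: Handler) -> None:
        """Register a handler that sees every event on every topic."""
        self._observers.append(handler)

    def publish(self, topic: str, event: dict) -> None:
        for handler in self._subscribers[topic]:
            handler(topic, event)
        for observer in self._observers:
            observer(topic, event)


class GovernanceObserver:
    """Records each exchange and flags events that exceed a size limit."""

    def __init__(self, max_fields: int = 10) -> None:
        self.trace: list[tuple[str, dict]] = []
        self.findings: list[str] = []
        self.max_fields = max_fields  # hypothetical volume control

    def __call__(self, topic: str, event: dict) -> None:
        self.trace.append((topic, event))      # observe (tracing)
        if len(event) > self.max_fields:       # quality control (volume)
            self.findings.append(f"{topic}: too many fields")


broker = InMemoryBroker()
observer = GovernanceObserver(max_fields=2)
broker.observe_all(observer)
broker.publish("orders", {"id": 1, "amount": 10})
broker.publish("payments", {"id": 1, "amount": 10, "iban": "redacted"})
```

Note that the observer only records findings; regular consumers still receive every event, which matches the detection-only behaviour of the proposed architecture.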

To do this, the system uses a certain amount of information:

- Event information

- Information from the channel where it is produced (topic information, certificates, etc.)

- Rules described in the system’s source code

- etc.

| Information | Description | Source | Note |
| --- | --- | --- | --- |
| Source application | Describes the source application sending the data. | Producer certificate | The data catalogue adds meta information that is useful to the exchanges. This information is versioned in a VCS. |
| Destination application | Describes the exchange’s destination application. | Consumer certificate | The data catalogue adds meta information that is useful to the exchanges. This information is versioned in a VCS. |
| Data type and context | Describes the context of the data, event type, data type, and possibly processing type. | Topic | This information is managed and versioned by the schema registry. |
| Data meta information | Provides some meta information about the data, in particular about the instance of the event. | Event meta information | This information is managed and versioned by the schema registry. |
| Data information | Provides information about the data transferred. | Event content | This information is managed and versioned by the schema registry. |
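The five kinds of information listed in the table can be gathered into a single structure that the observer builds for each exchange. The sketch below is in Python, and the `ExchangeContext` class and its field names are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Any, Mapping


@dataclass(frozen=True)
class ExchangeContext:
    """Everything the observer knows about one exchange (names are assumptions)."""

    source_application: str        # taken from the producer certificate
    destination_application: str   # taken from the consumer certificate
    topic: str                     # carries the data type and context
    meta: Mapping[str, str]        # event meta information (headers)
    data: Mapping[str, Any]        # event content


def describe(ctx: ExchangeContext) -> str:
    """Summarise one exchange as a single trace line."""
    return f"{ctx.source_application} -> {ctx.destination_application} via {ctx.topic}"


ctx = ExchangeContext(
    source_application="billing",
    destination_application="reporting",
    topic="invoices.created",
    meta={"event-id": "42"},
    data={"amount": 10},
)
print(describe(ctx))  # billing -> reporting via invoices.created
```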

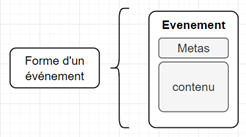

The form of an event is as follows:

Meta information corresponds to the event headers, which are standardised (*1).

The content of the message is also standardised. The format of each message is stored in the schema registry (*2). Only events compatible with the schema defined in the schema registry can be sent to the topic. Any event that does not conform to the specification of the topic on which it is sent is rejected (*3).
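The rejection of non-conforming events can be illustrated with a deliberately simplified check. This Python sketch does not use a real schema registry client: the `SCHEMA_REGISTRY` dictionary, the `orders` topic, and the `SchemaError` exception are hypothetical stand-ins.

```python
class SchemaError(ValueError):
    """Raised to the producer when an event does not match the topic schema."""


# Hypothetical registry content: topic -> (schema version, fields and types).
SCHEMA_REGISTRY = {
    "orders": (2, {"order_id": int, "amount": float}),
}


def validate(topic: str, payload: dict) -> int:
    """Return the schema version if the payload conforms, else raise SchemaError."""
    if topic not in SCHEMA_REGISTRY:
        raise SchemaError(f"no schema registered for topic {topic!r}")
    version, fields = SCHEMA_REGISTRY[topic]
    if set(payload) != set(fields):
        raise SchemaError(f"fields {sorted(payload)} do not match schema v{version}")
    for name, expected in fields.items():
        if not isinstance(payload[name], expected):
            raise SchemaError(f"{name!r} should be of type {expected.__name__}")
    return version
```

A producer would call `validate` before sending; a payload such as `{"order_id": "1", "amount": 9.5}` raises `SchemaError` because `order_id` is not an integer.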

These elements guarantee that metadata and data quality are managed correctly, in part through code review of the schemas describing the data and of the code describing the treatments applied to it.

Using certificates, and therefore securing exchanges, allows us to address data confidentiality and some aspects of data security.
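As a sketch of how a certificate can identify an application, the Python function below reads the common name from a certificate dictionary in the layout returned by `ssl.SSLSocket.getpeercert()` in the standard library. The `ALLOWED_PRODUCERS` allow-list and the `may_produce` helper are hypothetical and only illustrate one way to use that identity.

```python
from typing import Optional


def common_name(peer_cert: dict) -> Optional[str]:
    """Extract the commonName from a certificate dict.

    The layout is the one returned by ssl.SSLSocket.getpeercert():
    'subject' is a tuple of RDNs, each RDN a tuple of (key, value) pairs.
    """
    for rdn in peer_cert.get("subject", ()):
        for key, value in rdn:
            if key == "commonName":
                return value
    return None


# Hypothetical allow-list: which application may produce to which topic.
ALLOWED_PRODUCERS = {"orders": {"order-service"}}


def may_produce(peer_cert: dict, topic: str) -> bool:
    """True when the certificate's common name is allowed on the topic."""
    return common_name(peer_cert) in ALLOWED_PRODUCERS.get(topic, set())


cert = {
    "subject": (
        (("organizationName", "Example Corp"),),
        (("commonName", "order-service"),),
    )
}
print(may_produce(cert, "orders"))    # True
print(may_produce(cert, "payments"))  # False
```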

The schema registry manages the quality of the data exchanged and, in part, data version lifecycles.

(*1). Messages that do not have all the required headers are blocked, and alerts are sent to the various stakeholders to inform them of the problem.

(*2). The message schemas are also versioned.

(*3). The producer receives an error when it tries to send events that do not match the schema.
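The header check described in note (*1) can be sketched as follows in Python. The set of required headers and the `alert` callback are assumptions made for the example; the actual list of headers would be defined by the governance framework.

```python
from typing import Callable

# Hypothetical list of mandatory headers.
REQUIRED_HEADERS = {"event-id", "event-type", "source", "timestamp"}


def check_headers(headers: dict, alert: Callable[[str], None]) -> bool:
    """Return True if all required headers are present; otherwise alert and block."""
    missing = sorted(REQUIRED_HEADERS - set(headers))
    if missing:
        alert(f"event blocked, missing headers: {', '.join(missing)}")
        return False
    return True


alerts: list = []
accepted = check_headers({"event-id": "1"}, alerts.append)
```

Here `alerts.append` stands in for whatever notification channel informs the stakeholders.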

Downstream treatments

In the proposed architecture, the system detects violations of the rules set out in the governance framework; it will not prohibit operations violating these rules.

These detections are made by processing data governance streams downstream of the observer.

Downstream from the broker, on the consumer side, a set of streams implements the processing dedicated to data governance. These can be compositions of treatments performed on data streams using technologies such as Kafka Streams or Flink. The sinks of these treatments can be:

- BI reports

- Control dashboards

- Databases

- Alerts / notifications
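To illustrate the idea of composing treatments that end in a sink, here is a small sketch using plain Python generators in place of a stream-processing engine such as Kafka Streams or Flink. The `burst_detector` rule, the `ts` and `source` event fields, and the list used as an alert sink are all assumptions made for the example; events are assumed to arrive in time order.

```python
from collections import deque
from typing import Iterable, Iterator


def burst_detector(events: Iterable[dict], limit: int, window_s: float) -> Iterator[dict]:
    """Yield a finding when a source sends more than `limit` events in `window_s` seconds.

    Each event is assumed to carry a numeric 'ts' (seconds) and a 'source' field.
    """
    recent: dict = {}
    for event in events:
        times = recent.setdefault(event["source"], deque())
        times.append(event["ts"])
        # Drop timestamps that have fallen out of the sliding window.
        while times and event["ts"] - times[0] > window_s:
            times.popleft()
        if len(times) > limit:
            yield {"rule": "burst", "source": event["source"], "count": len(times)}


def to_alert_sink(findings: Iterable[dict], sink: list) -> None:
    """Terminal step: push findings to a sink (a list standing in for alerts)."""
    for finding in findings:
        sink.append(f"[{finding['rule']}] {finding['source']} sent {finding['count']} events")


stream = [{"source": "crm", "ts": t} for t in (0, 1, 2, 30)]
alerts: list = []
to_alert_sink(burst_detector(stream, limit=2, window_s=10), alerts)
print(alerts)  # ['[burst] crm sent 3 events']
```

The same finding stream could just as well feed a dashboard or a database; only the terminal function changes.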

Data sensitivity matrices provided to the downstream processing make it possible to:

- Guarantee data confidentiality

- Guarantee exchange security by detecting data leaks
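A sensitivity matrix can be as simple as a mapping from each destination to the highest sensitivity level it is cleared to receive. The Python sketch below uses hypothetical levels (`public`, `internal`, `confidential`) and hypothetical application names to show how a leak could be detected.

```python
# Hypothetical sensitivity levels, ordered from least to most sensitive.
LEVELS = {"public": 0, "internal": 1, "confidential": 2}

# Hypothetical matrix: highest level each destination application may receive.
CLEARANCE = {"reporting": "internal", "billing": "confidential"}


def is_leak(destination: str, sensitivity: str) -> bool:
    """True when data reaches a destination not cleared for its sensitivity."""
    # Unknown destinations get the lowest clearance.
    allowed = CLEARANCE.get(destination, "public")
    return LEVELS[sensitivity] > LEVELS[allowed]


print(is_leak("reporting", "confidential"))  # True
print(is_leak("billing", "confidential"))    # False
```

Defaulting unknown destinations to the lowest clearance is a design choice made here so that unregistered consumers are flagged rather than silently accepted.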

Reference data and data lifecycle management are achieved through:

- Schemas describing the events

- Rules in the code

- A set of tools integrated into the treatment streams

- Workflows whose specifications are coded, to automate processes such as deleting data once the retention period has expired

- etc.
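As an example of a coded workflow, the following Python sketch deletes records once their retention period has expired. The `RETENTION` policy, the record shape (a dictionary with `type` and `created` keys), and the in-memory `store` list are assumptions made for illustration.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention policy per data type.
RETENTION = {"invoice": timedelta(days=3650), "session-log": timedelta(days=30)}


def expired(records: list, now: datetime) -> list:
    """Return the records whose retention period has elapsed at `now`."""
    return [r for r in records if now - r["created"] > RETENTION[r["type"]]]


def purge(store: list, now: datetime) -> int:
    """Delete expired records in place and return how many were removed."""
    to_delete = expired(store, now)
    for record in to_delete:
        store.remove(record)
    return len(to_delete)


now = datetime(2024, 1, 1, tzinfo=timezone.utc)
store = [
    {"type": "session-log", "created": now - timedelta(days=31)},
    {"type": "session-log", "created": now - timedelta(days=5)},
    {"type": "invoice", "created": now - timedelta(days=400)},
]
removed = purge(store, now)
```

Passing `now` explicitly keeps the function deterministic, which makes the retention rule easy to test and audit.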

Draft critique of the solution

Pros

The architecture lends itself very well to automatic, real-time decision-making, and it can easily evolve as the organisation matures.

Cons

The architecture is complex. It requires mastering a number of tools and a minimum of automation to avoid manual tasks that could become excessively time-consuming (e.g., certificate management).

As proposed, the solution does not prohibit exchanges that violate governance rules, although it can be adapted to do so. The use of streams can also be expensive, so the choice of technologies must be made carefully to control costs.

Many other criticisms of the proposed solution could be made, but we will not go into more detail for the sake of brevity.

Conclusion

The architecture presented here answers a number of data governance questions: once the governance framework has been defined, we can implement its rules in the system’s source code. That code is reviewed before being deployed and can be audited.

The proposed solution provides a system capable of handling governance issues in real time. By standardising the exchanges in code, we obtain a system capable of observing itself and of detecting violations of specific governance rules or, once adapted, prohibiting them.

Here we have dealt with inter-application data flows. We did not cover direct data access by users, as is the case, for example, when web or mobile applications retrieve data through REST APIs using JWT tokens. The OAuth2 protocol covers this aspect, and the proposed architecture has its equivalent for data issued by API handlers.